Whenever we create a new communications technology, we try new ways of telling stories with it.

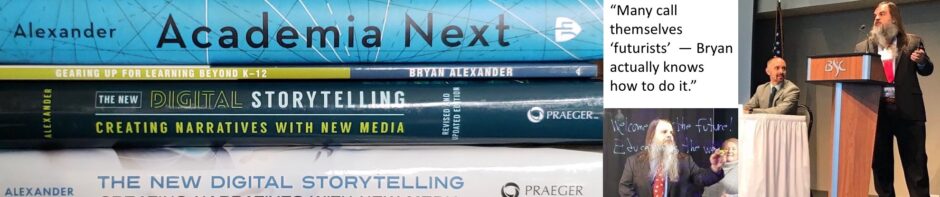

That’s one of my long-running contentions. I made it in digital storytelling workshops and my first book (ABC-CLIO; Amazon). My thinking is that humans are deeply invested in stories, and we are also capable of a great deal of creativity with technologies.

This is how I viewed a fascinating article about the so-called #AICinema movement. Benj Edwards describes this nascent current and interviews one of its practitioners, Julie Wieland. It’s a great example of people creating small stories using tech – in this case, generative AI, specifically the image creator Midjourney.

In short, a creator crafts detailed prompts for the AI, selects the best results, edits them to some degree in Photoshop, arranges the best into a series, then adds a title that sets up a plot. For example, here’s “la dolce vita” by Wieland, each image in sequence:

The story is clear: a girl dreams of sweets, then heads to a store, where she finds a charming candy seller. It’s not an epic, being just four images with a single line of text, but it does present a clear narrative arc.

It reminds me of the Flickr group narrative photo project “Tell a story in 5 frames (Visual story telling),” which started in 2004. People shared stories which consisted of, as you might suppose, five images plus a title. And sharing was the point – check Alan Levine’s writeup for the deeply social nature of the project.

This practice also reminds me of comics of all kinds. Dialogue and text boxes would make it even more so, assuming either people add them manually via Photoshop or generative AI improves to the point of making legible text within images.

I haven’t mentioned movies yet, and they seem to be key to this new effort. Wieland pursued her experiment partly to create what she saw as higher-quality, movie-like images, as she explains in the Ars interview:

Ars: What inspired you to create AI-generated film stills?

Wieland: It started out with dabbling in DALL-E when I finally got my access from being on the waitlist for a few weeks. To be honest, I don’t like the “painted astronaut dog in space” aesthetic too much that was very popular in the summer of 2022, so I wanted to test what else is out there in the AI universe. I thought that photography and movie stills would be really hard to nail, but I found ways to get good results.

Yet these are not images in isolation. Their connection, their sequencing, is where the stories emerge. The model here is motion pictures:

[S]ome artists began creating multiple AI-generated images with the same theme—and they did it using a wide, film-like aspect ratio. They strung them together to tell a story and posted them on Twitter with the hashtag #aicinema. Due to technological limitations, the images didn’t move (yet), but the group of pictures gave the aesthetic impression that they all came from the same film.

The fun part is that these films don’t exist.

In a sense these are artifacts of nonexisting movies, or the first steps towards films to come. For now they are short stories based on AI-generated images.

Here’s another example, an untitled quartet:

When I look at these four images in a row, without the guidance of a title or other text, stories emerge in my mind. We’re in a town witnessing an explosion, and we see a young woman react, then an older man and child. Perhaps the man and child drove the car to the last image’s vantage point, or maybe the car belongs to some other character who died? fled? sleeps now in the vehicle?

Other viewers had more open-ended responses to this grouping, or rather questions. Here’s a Twitter exchange between one viewer and Wieland:

These are stunning Julie. What story were ypu trying to tell in these? 🙌

— Webster ⚡ (@WebsterMugavazi) April 2, 2023

sometimes you just gotta watch the world burn, I guess 💥🔥

Again, as with that Flickr group, digital storytelling is about conversation and interpretation.

To be honest, I’m not comfortable with the cinema label here, at least not yet. I can see the rhetorical strategy of claiming film as an aesthetic ideal for AI-generated images, especially in contrast to the more cartoonish output of, say, Craiyon. There’s also the resonance of claiming high art (“cinema,” not “movies” or cartoons).

For now, I think of these as comics, comic strips in particular. Or what Alan and I called Web 2.0 storytelling, back in the day. Definitely multimedia stories. And the generative image tools make it easier for a lot of people to make them.

Where does this head next? If these AI tools keep improving (Midjourney is now in a fresh and more powerful update) and if people have access to them (they are free for now; only Italy has banned them at a general level), we should expect more people to create more such comic/cinema stories, at least at a quantitative level. The genie is out of the bottle, and we are story creatures, after all.

On a qualitative level we might anticipate a flourishing of formal experimentation. The examples I cite here have four images; why not three or twenty-three? The above instances are linear in structure; how about crafting multilinear or interactive tales? Think of the vast history of comic books, stretching back more than a century, or the centuries of sequential art. There are so, so many options.

Then cinema might come in, starting at a basic level. Imagine an AI-backed flipbook creator, where you can start off with some parameters (my friend Ruben walking along a stormy beach) and the tool generates a slew of individual, iterated frames sharing a common situation with small differences between each, plus a title card at the start or end. You could print the frames on paper and make a classic flipbook, or let the AI animate them into a short video or GIF. Next up, adding subtitles and audio tracks. After that, competing animation offerings, each with its own style, affordances, and connections to other platforms. (This blossoming of different, divergent, competing AI tools is what I’ve been expecting.) This expansion of features, combined with growing file sizes, would drive the nascent art form toward TV-episode and then feature-film length.

As with any storytelling project or information technology, this art form should also elicit criticism, even hostility. Some will accuse users of not making “real” art, since they’re relying on generative AI models instead of actually drawing images or taking photos themselves. (Cf. the critiques of the electric guitar or DJing.) Others will critique the form for complicity with the owners of the technologies and their bad practices: training bots on the work of uncompensated artists, forcing viewers into certain platforms, perhaps arrogating ownership of the results, and so on. Another critique might come from supporters of other art forms, who could see #AICinema as unfair or illegitimate competition on economic, aesthetic, and/or political grounds. Imagine what might occur when people start making provocative or explicitly political stories.

…or #AICinema might become a minor, niche art form, unthreatening and uncommercialized, like interactive fiction is today. The history of storytelling includes culture-transforming forms, but also ones which never crack the big time.

I need to pause here because I have to work on other projects with deadlines, including my Universities on Fire book tour and interviews. Have you seen any other instances of AI storytelling? Or have you made any yourselves?

EDITED TO ADD: I shared this story on Twitter, as is my custom, and both Wieland and Edwards responded most kindly. Then one John Polacek chimed in, introducing us to his “AI movie generator” Botlywood. He fed “la dolce vita” into the app and generated a film treatment, complete with cast and multi-act plot summary.

Fascinated, I signed up for Botly and quickly (in under five minutes) created a heist movie description, The Heist of the Unforeseen.

This app reminds me of a fun 2005 computer game, The Movies, where the player runs a movie studio and can create a series of movie ideas, among other things.

It turns out Polacek only just launched Botly. So AI-generated digital storytelling seems to be moving very quickly indeed.

I’m reminded once again that humans have a persistent habit of filling a new communication medium with the old medium’s content. This is not a criticism, just an observation that our creativity in the present is limited to the visual and semantic linguistics of the past.

This relates to a brief article by Rosenbaum et al., “Play, Chat, Date, Learn, and Suffer: Merton’s Law of Unintended Consequences and Digital Technology Failures” (https://www.academia.edu/51146401/Play_Chat_Date_Learn_and_Suffer_Mertons_Law_of_Unintended_Consequences_and_Digital_Technology_Failures), which extends Robert K. Merton’s Law of Unintended Consequences (1936) (https://www.econlib.org/library/Enc/UnintendedConsequences.html).

The implication is that we cannot know in advance how any new medium will emerge in its own unique way, with its own “grammar,” and subsequently affect social relationships (among other things). Neither Mark Zuckerberg nor Jack Dorsey, in their formative days, could have imagined the unintended effects of their platforms on the integrity of democracy itself.

Thus, the Law of Unintended Consequences ought to serve as a guidepost for anticipating adverse effects, and the proprietors of these technologies ought to seek counsel from a panel of experts. Of course, that’s never good for business, so my point is moot. 🙂

Thanks for digging this up; I’ve been thinking it through since you shared it. The images are stunning indeed, though I think a lot of effort goes undescribed in the DALL-E outpainting and Photoshopping, well beyond typing into the Craiyon box.

And how big is the leap from CGI for scenery and effects to CGI for the actors? Flawless AI (https://www.flawlessai.com/) hints at this next wave, though I wonder about its marketspeak claims of “Truthful Storytelling.”

They do work well for four- or five-frame storytelling; maybe now they can be dealt out for a new version of 5 Card Nancy!

This could be fun for your Nancy.

I still think the flipbook is next.

Good stuff doc!

“Dialogue and text boxes would make it even more so, assuming either people add them manually via Photoshop or generative AI improves to the point of making legible text within images.” This reminds me of a multilingual web cartoon I made with a cartoon creator in 2012. We needed three layers: one for the drawn picture, one for the text box, and one for the text. Because texts run to different lengths in different languages, we also ended up with differently sized text boxes. AI would have been a great help there. (It might still be online: http://spacesleepsyndrome.com/index.php)
Cheerio, Garth

Garth, that’s a great comic!

It was fun to see your Twitter interplay with the Botlywood developer. I wasn’t clear whether just entering the same name for the “la dolce vita” example automatically grabbed the 3 images from the same-named series by Julie Wieland.

I can’t say I was all that impressed with the Botlywood writing! It was all tell, no show 😉 But it’s a concept.

Narratives are not necessarily neutral.

Here is the narrative soul of America in the shadows.

https://pluralistic.net/2023/04/10/declaration-of-interdependence/#solidarity-forever

Incredible pics, very atmospheric!

AI rocks!